What a difference a Gig makes

We’re working on a project at the moment that involves deploying various Linux services for visualising oceanographic modelling data, using tools such as Unidata’s THREDDS Data Server (TDS) and NOAA/PMEL’s Live Access Server (LAS). TDS is a web server that makes scientific datasets available via various protocols, including plain old HTTP, WCS and OPeNDAP (which allows a subset of the original dataset to be accessed). LAS is a web server which, using sources such as an OPeNDAP service from TDS, lets you visualise scientific datasets: it renders the data overlaid onto world maps and lets you select the particular variables you are interested in. In our case, the datasets are generated by the Regional Ocean Modeling System (ROMS) and include variables such as sea temperature and salinity at various depths.

The data generated by the ROMS models we are looking at uses a curvilinear coordinate system. To the best of my understanding (and I’m a Linux guy, not an oceanographer, so my apologies if this is a poor explanation), since the data is modelling behaviour on a spherical surface (the Earth), it makes more sense to use a curvilinear coordinate system. Unfortunately, some of the visualisation tools, LAS in particular, prefer to work with data on a regular or rectilinear grid. Part of our workflow therefore involves remapping the data from curvilinear to rectilinear using a tool called Ferret (also from NOAA). Ferret does a whole lot more than regridding (and is, in fact, used under the hood by LAS to generate a lot of its graphical output), but in our case we’re interested mainly in its ability to regrid the data from one gridding system to another. Ferret is an interesting tool/language: an example of the kind of script required for regridding is this one from the Ferret examples and tutorials page. Did I mention we’re not oceanographers? Thankfully, someone else prepared the regridding script; our job was to get it up and running as part of our workflow.

We’re nearly back to the origins of the title of this piece now, so bear with me!

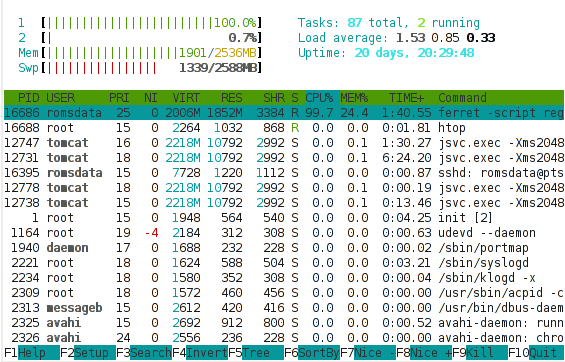

We’re using a VMware virtual server as a test system. Our initial deployment was a single-processor system with 1 GB of memory. It seemed to run reasonably well with TDS and LAS: it was responsive and completed requests in a reasonable amount of time (purely subjective, but probably under 10 seconds if Jakob Nielsen’s paper is anything to go by). We then looked at regridding some of the customer’s own data using Ferret and were disappointed to find that an individual file took about 1 hour to regrid. We had about 20 files for testing purposes and in practice would need to regrid 50-100 files per day. I took a quick look at the performance of our system using the htop tool (like the traditional top tool found on all *ix systems, but with various enhancements and very clear colour output). There are more detailed performance analysis tools (including Dag Wieers’ excellent dstat), but sometimes I find a good high-level summary more useful than a sea of numbers and performance statistics. Here’s a shot of the htop output during a Ferret regrid.

What is interesting in this shot is that:

- All of the memory is used (and in fact, a lot of swap is also in use).

- While running the Ferret regridding, a lot of processor time is being spent on kernel activity (red) instead of normal user (green) activity.

High kernel (or system) usage of the processor is often indicative of a system that is tied up doing lots of I/O. If your system is supposed to be doing I/O (a fileserver or network server of some sort), then this is fine. If your system is supposed to be performing an intensive numerical computation, as here, we’d hope to see most of the processor being used for that compute-intensive task, with a correspondingly high percentage of normal (green) processor usage. Given the above, it seemed likely that the Ferret regridding process needed more memory to regrid the given files efficiently, and that it was spending lots of time thrashing (moving data between swap and main memory due to a shortage of main memory).
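The red and green bars in htop summarise counters that Linux exposes in `/proc/stat`. As a rough sketch (this is not something we ran at the time, and the function names `parse_cpu_line`, `cpu_shares` and `sample` are made up for illustration), the same user-versus-kernel split can be computed by reading the aggregate `cpu` line twice and comparing the two readings:

```python
import time

# Field order of the aggregate "cpu" line in /proc/stat (values are clock ticks).
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(stat_text):
    """Return the aggregate 'cpu' counters from /proc/stat text as a dict."""
    for line in stat_text.splitlines():
        parts = line.split()
        if parts and parts[0] == "cpu":
            values = [int(v) for v in parts[1:1 + len(FIELDS)]]
            return dict(zip(FIELDS, values))
    raise ValueError("no aggregate 'cpu' line found")


def cpu_shares(before, after):
    """Percentage of elapsed CPU time spent in each state between two samples."""
    deltas = {name: after[name] - before[name] for name in before}
    total = sum(deltas.values())
    if total == 0:
        return {name: 0.0 for name in deltas}
    return {name: 100.0 * ticks / total for name, ticks in deltas.items()}


def sample(interval=5.0, path="/proc/stat"):
    """Take two readings `interval` seconds apart (Linux only; hypothetical helper)."""
    with open(path) as f:
        before = parse_cpu_line(f.read())
    time.sleep(interval)
    with open(path) as f:
        after = parse_cpu_line(f.read())
    return cpu_shares(before, after)
```

Calling `sample()` while a regrid is running returns a dictionary of percentages. A `system` or `iowait` share that dwarfs `user` would be the numeric equivalent of the mostly red bar described above.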

Since we’re working on a VMware server, we can easily tweak the settings of the virtual server and add some more processor and memory. We did just that after shutting down the Linux server. We restarted the server, and Linux immediately recognised the additional memory and processor and started using them. We retried our Ferret regridding script and noticed something interesting. But first, here’s another shot of the htop output during a Ferret regrid with an additional gig of memory.

What is immediately obvious here is that the vast majority of the processor is busy with user activity – rather than kernel activity. This suggests that the processor is now being used for the Ferret regridding, rather than for I/O. This is only a snapshot and we do observe bursts of kernel processor activity still, but these mainly coincide with points in time when Ferret is writing output or reading input, which makes sense. We’re still using a lot of swap, which suggests there’s scope for further tweaking, but overall, this picture suggests we should be seeing an improvement in the Ferret script runtime.

Did we? That would be an affirmative. We saw the time to regrid one file drop from about 60 minutes to about 2 minutes. No, that’s not a typo: 2 minutes. By adding 1 GB of memory to our server, we reduced the overall runtime of the operation by about 97%. That is a phenomenal achievement for such a small, cheap change to the system configuration (1 GB of typical system memory costs about €50 these days).
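To put those numbers in context, here is a back-of-the-envelope sketch of what they mean for the 50-100 files per day we need to process. It assumes the files are regridded one after another on a single server and that every file takes about as long as our test file did; both are simplifications, and the function names are just for illustration.

```python
def daily_regrid_hours(files_per_day, minutes_per_file):
    """Hours of wall-clock time needed to regrid a day's files serially."""
    return files_per_day * minutes_per_file / 60.0


def percent_reduction(before_minutes, after_minutes):
    """Reduction in runtime, as a percentage of the original runtime."""
    return 100.0 * (before_minutes - after_minutes) / before_minutes


# Figures from the post: ~60 minutes per file with 1 GB, ~2 minutes with 2 GB.
for files in (50, 100):
    before = daily_regrid_hours(files, 60)
    after = daily_regrid_hours(files, 2)
    print(f"{files} files/day: {before:.1f} h before, {after:.1f} h after")

print(f"runtime reduction: {percent_reduction(60, 2):.1f}%")
```

At an hour per file, even 50 files a day would need more than twice the 24 hours available, so the original configuration could never have kept up. At 2 minutes per file, 100 files fit into well under 4 hours.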

What’s the moral of the story?

- Understand your application before you attempt tuning it.

- Never, ever tune your system or your application before you understand where the bottlenecks are.

- Hardware is cheap: consider throwing more hardware at a problem before attempting expensive performance tuning exercises.

(With apologies to María Méndez Grever and Stanley Adams for the title!)
