Web 2.0 executive summary

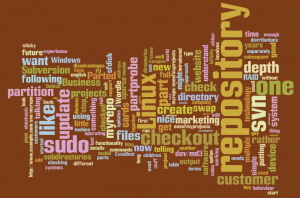

Wordle, a neat applet from IBM, generated the following output from this blog’s RSS feed.

Update: Regenerated to address stattos’s concerns about the presence of banks at the centre of the old one. I think this one might make a nice T-shirt image.
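Wordle gives more prominence to words that appear more often in the source text, which is how a frequently repeated word such as "banks" ends up large and central. The Python below is a minimal sketch of that first counting step, not Wordle's actual code. It assumes an RSS 2.0 feed, reads only each item's `<title>` and `<description>`, and uses a deliberately tiny, illustrative stop-word list.

```python
import re
import xml.etree.ElementTree as ET
from collections import Counter

# Illustrative stop-word list (an assumption; real word-cloud tools use much longer lists).
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "it",
              "for", "on", "that", "this", "with"}

def word_frequencies(rss_xml: str, top_n: int = 10) -> list[tuple[str, int]]:
    """Count words in the <title> and <description> of each RSS 2.0 <item>."""
    root = ET.fromstring(rss_xml)
    words = Counter()
    for item in root.iter("item"):
        for tag in ("title", "description"):
            text = item.findtext(tag) or ""
            # Descriptions often carry escaped HTML; once parsed, strip the tags.
            text = re.sub(r"<[^>]+>", " ", text)
            for word in re.findall(r"[a-z']+", text.lower()):
                # Skip stop words and very short tokens.
                if word not in STOP_WORDS and len(word) > 2:
                    words[word] += 1
    # The most frequent words would be drawn largest in a word cloud.
    return words.most_common(top_n)
```

Font size in the cloud would then be scaled from these counts; the layout step that packs the words together is a separate problem not sketched here.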

Converting an OpenOffice Calc spreadsheet to an image

Just a quick tip for when you have a spreadsheet, or part of one, in OpenOffice Calc that you want to convert to an image (for use in a web page or something).

I was initially dismayed to find that Export and Save As in OpenOffice Calc only support other spreadsheet formats, XHTML and PDF (to be fair, the XHTML is pretty clean compared to that output by Microsoft Office, but it wasn’t what I wanted in this case).

After some playing around, I figured out a pretty easy way of converting my spreadsheet to an image. I highlighted the table in OpenOffice Calc and clicked on Edit / Copy. Then I started the GNU Image Manipulation Program (GIMP) – I guess other graphics programs should work equally well – and clicked on File / Acquire / Paste as New and voilà: the spreadsheet appeared in a new GIMP window, ready to be saved in whatever graphics format you wish.

(I did this on a Linux system; I’d be curious to know if the same works on Windows.)

Linux and the Semantic Web

I’ve recently (well, back in January, but it took me a while to blog about it) started working with the DERI Data Intensive Infrastructure group (DI2). The Digital Enterprise Research Institute (DERI) is a Centre for Science, Engineering and Technology (CSET) established in 2003 with funding from Science Foundation Ireland. Its mission is to Make the Semantic Web Real – in essence, DERI is working on both the theoretical underpinnings of the Semantic Web and on developing tools and technologies which will allow end-users to utilise the Semantic Web.

The group I’m working with, DI2, has a number of interesting projects, including Sindice, which aims to be a search engine for the Semantic Web, and a forthcoming project called Webstar, which aims to crawl and store most of the current web as structured data. Webstar will allow web researchers to perform large-scale data experiments on this store, letting them focus on their goals rather than spending huge resources crawling the web and maintaining large data storage infrastructures.

Sindice and Webstar both run on Linux-based commodity hardware. We’re using technologies such as Apache Hadoop and Apache HBase to store these huge datasets across a large number of systems. We are initially working with a cluster of about 40 computers but expect it to grow over time.
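To make "distributed across a large number of systems" a little more concrete: Hadoop's HDFS splits each file into fixed-size blocks and stores several copies (replicas) of each block on different machines. The sketch below assumes the 64 MB block size and replication factor of 3 that were the defaults in Hadoop 0.20/1.x, and hypothetical node names `node01` to `node40`. Its round-robin placement is a deliberate simplification, not HDFS's real rack-aware placement policy.

```python
import math

BLOCK_SIZE = 64 * 1024 * 1024   # 64 MB: HDFS default block size in Hadoop 0.20/1.x
REPLICATION = 3                 # HDFS default replication factor

def plan_blocks(file_size: int, nodes: list[str],
                block_size: int = BLOCK_SIZE,
                replication: int = REPLICATION) -> list[list[str]]:
    """Split a file into blocks and assign each block to `replication` distinct nodes.

    Placement is simple round-robin for illustration, NOT HDFS's rack-aware policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size / block_size)
    plan = []
    for b in range(num_blocks):
        # Consecutive nodes (wrapping around) guarantee distinct replicas
        # as long as replication <= len(nodes).
        plan.append([nodes[(b + r) % len(nodes)] for r in range(replication)])
    return plan

# Hypothetical naming scheme for a 40-node cluster.
NODES = [f"node{i:02d}" for i in range(1, 41)]
```

With these assumptions, a 1 GB file becomes 16 blocks and 48 stored block replicas, i.e. about 3 GB of raw disk across the cluster. That storage overhead is the price paid for surviving the loss of individual commodity machines.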

My role in DI2 is primarily the care of this Linux infrastructure. Some of the problems we need to deal with include how to quickly install (and re-install) a cluster of 40 Linux systems, how to efficiently monitor and manage these 40 systems, and how to optimise them for performance. We’ll use a lot of the same technologies that are used in Beowulf-style clusters, but since we’re focused on distributed storage rather than parallel processing, there are differences. I’ll talk a little about our approach to mass-installing the cluster in my next post.
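The post doesn't describe the actual monitoring tools used. As a hedged illustration of one small piece of "efficiently monitor 40 systems", the sketch below checks whether each node accepts TCP connections, probing all hosts concurrently so that dead nodes don't make the check take 40 times the timeout. The hostnames `node01` to `node40` and the choice of SSH on port 22 are assumptions for illustration only.

```python
import socket
from concurrent.futures import ThreadPoolExecutor

def cluster_hostnames(prefix: str = "node", count: int = 40) -> list[str]:
    """Hypothetical naming scheme: node01 ... node40."""
    return [f"{prefix}{i:02d}" for i in range(1, count + 1)]

def is_reachable(host: str, port: int = 22, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, or hostname not resolvable
        return False

def check_cluster(hosts: list[str], port: int = 22,
                  timeout: float = 2.0) -> dict[str, bool]:
    """Probe all hosts in parallel threads; map each hostname to True/False."""
    with ThreadPoolExecutor(max_workers=max(1, len(hosts))) as pool:
        results = pool.map(lambda h: is_reachable(h, port, timeout), hosts)
        return dict(zip(hosts, results))
```

Calling `check_cluster(cluster_hostnames())` returns a dictionary such as `{"node01": True, ...}`, from which the unreachable nodes can be listed. A real setup would more likely use a dedicated monitoring system, but the concurrency idea is the same.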
